Introducing gpt-oss-safeguard: An open safety reasoning model for developers

OpenAI has released gpt-oss-safeguard, a pair of open-weight reasoning models designed specifically for safety classification tasks. The models let developers build, test, and deploy safety classifiers driven by their own policies. They come in two sizes, 120B and 20B, are fine-tuned versions of the original gpt-oss models, and are released under the Apache 2.0 license, so anyone can use, modify, and deploy them freely.

Both models are available for download today from Hugging Face, giving researchers and developers safety reasoning tools that can be tailored to their organization's policies and standards.

A new perspective on safety reasoning

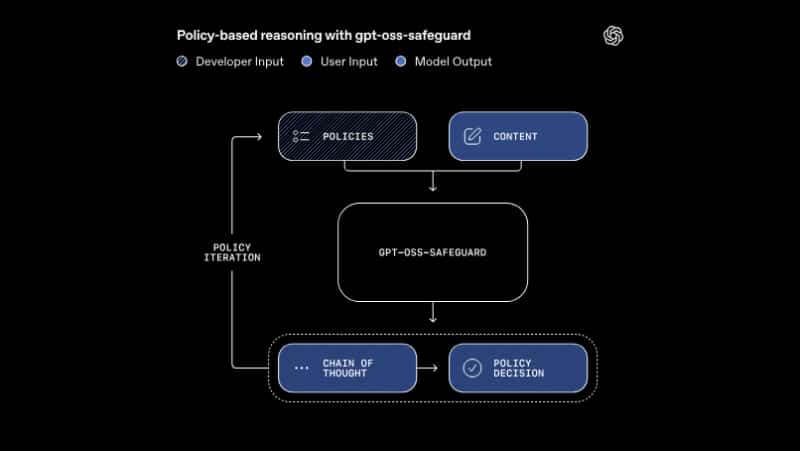

Unlike traditional classifiers, which learn a fixed decision boundary from labeled training data, gpt-oss-safeguard reasons at inference time over a policy supplied by the developer. The model can therefore classify user messages, model replies, or whole conversations according to whatever policy it is given, which makes it both flexible and transparent.

At inference time, the model receives two inputs:

- The safety policy (as defined by the developer)

- The content to be evaluated

The model then outputs a classification together with its reasoning. Developers can audit that reasoning to understand how a decision was reached and to refine the policy.
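The two inputs described above can be sketched as a chat-style message list. This is a minimal illustration, not the official prompt format: placing the policy in the system message and the content in the user message is an assumption, and `build_messages`, `CHEATING_POLICY`, and the label names are hypothetical. Check the model card on Hugging Face for the recommended layout.

```python
from typing import Dict, List


def build_messages(policy: str, content: str) -> List[Dict[str, str]]:
    """Pair a developer-written policy with the content to classify.

    Assumption: the policy goes in the system message and the content in
    the user message. The real recommended layout may differ.
    """
    if not policy.strip():
        raise ValueError("policy must not be empty")
    return [
        {"role": "system", "content": policy.strip()},
        {"role": "user", "content": content},
    ]


# Hypothetical policy for a game forum; the wording is illustrative only.
CHEATING_POLICY = """\
Classify the post as VIOLATION if it shares or requests cheats, exploits,
or hacks for the game. Otherwise classify it as ALLOWED.
Answer with the label and a short rationale."""

messages = build_messages(CHEATING_POLICY, "Anyone have a working aimbot for ranked?")
```

Because the policy is ordinary text passed in at inference time, changing the rules means editing `CHEATING_POLICY` rather than retraining a classifier.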

Because the policy is supplied at inference time rather than baked in during training, organizations can revise or extend their safety policies without retraining a classifier, which helps them respond quickly as circumstances change.

How gpt-oss-safeguard supports specialized safety policies

With gpt-oss-safeguard, developers can design safeguards that fit their own platform. For example:

- A game forum can write a policy that flags posts discussing cheating or other inappropriate behavior.

- An e-commerce platform can screen for fake reviews or misleading content.

- A social media service can apply community-specific moderation rules that change over time.

This reasoning-based approach is particularly useful when:

- The potential harm is emerging or evolving quickly, so policies must adapt fast.

- The domain is too nuanced for a small classifier to handle well.

- There is not enough labeled data to train a dedicated classifier.

- Transparent, explainable decisions matter more than low latency.

How OpenAI uses safety reasoning internally

OpenAI uses a similar internal tool, Safety Reasoner, behind systems such as GPT-5, ChatGPT Agent, and Sora 2. It builds on deliberative alignment, an approach in which the model reasons directly about safety policies instead of relying solely on learned examples.

Safety Reasoner applies safety policies in real time and has accounted for up to 16% of total compute. Because policies can be updated without retraining, OpenAI can adjust its safety boundaries quickly as risks evolve, which strengthens its defense-in-depth approach.

For image and video models like Sora 2, Safety Reasoner performs real-time, step-by-step safety checks to identify and block unsafe outputs before they reach the user.

Performance and Evaluation

OpenAI evaluated gpt-oss-safeguard on both internal and public datasets, including ToxicChat and the moderation dataset OpenAI released in 2022.

The test results show that:

- gpt-oss-safeguard achieves higher multi-policy accuracy than gpt-5-thinking and the original gpt-oss models.

- On moderation and toxicity datasets, the model demonstrated better interpretability and adaptability than traditional classifiers in complex situations.

- Although the 20B version is smaller, it still performs well for real-world content moderation use cases.
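The article does not define multi-policy accuracy. One plausible reading, shown here purely as an assumption, is that the model is given several policies at once and an input counts as correct only if the predicted label matches the reference label for every policy. The function name and the sample labels below are hypothetical and are not OpenAI's evaluation data.

```python
from typing import Dict, List

Labels = Dict[str, bool]  # policy name -> whether the input violates it


def multi_policy_accuracy(predicted: List[Labels], gold: List[Labels]) -> float:
    """Fraction of inputs whose labels match the gold labels on ALL policies."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        return 0.0
    exact_matches = sum(1 for p, g in zip(predicted, gold) if p == g)
    return exact_matches / len(gold)


# Made-up labels for two inputs judged against two policies.
gold = [
    {"cheating": True, "spam": False},
    {"cheating": False, "spam": False},
]
predicted = [
    {"cheating": True, "spam": True},    # one policy wrong, so the input is wrong
    {"cheating": False, "spam": False},  # all policies match
]
score = multi_policy_accuracy(predicted, gold)
```

Under this reading the metric is stricter than per-policy accuracy: a single mismatch on any policy makes the whole input count as an error.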

Limitations and trade-offs

Although gpt-oss-safeguard is flexible and transparent, it has two limitations:

- Performance ceiling: Specialized classifiers trained on large, high-quality labeled datasets may still be more accurate, especially in very complex domains.

- Compute cost: Reasoning-based classification is slower and more resource-intensive, which makes it hard to apply to every item on platforms that need low latency and high throughput.

OpenAI addresses the compute cost internally by pairing a fast, lightweight classifier with Safety Reasoner, which performs deeper analysis only when needed.
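The two-stage pattern described above can be sketched as a simple cascade. This is a toy illustration under stated assumptions, not OpenAI's implementation: `keyword_score` stands in for a real lightweight classifier, `stub_reasoner` stands in for a call to a reasoning model, and the 0.3 threshold is arbitrary.

```python
from typing import Callable, List, Tuple

Decision = Tuple[str, str]  # (label, rationale)


def cascade(content: str,
            fast_score: Callable[[str], float],
            reasoner: Callable[[str], Decision],
            threshold: float = 0.3) -> Decision:
    """Run the cheap scorer first; escalate to the reasoner only when flagged."""
    if fast_score(content) < threshold:
        return ("ALLOWED", "fast classifier score below threshold")
    return reasoner(content)


def keyword_score(content: str) -> float:
    """Stand-in for a lightweight classifier: share of risky keywords present."""
    keywords = ("aimbot", "wallhack", "exploit")
    hits = sum(1 for k in keywords if k in content.lower())
    return hits / len(keywords)


escalated: List[str] = []  # records what reached the expensive stage


def stub_reasoner(content: str) -> Decision:
    """Stand-in for a reasoning model call; always returns a fixed decision."""
    escalated.append(content)
    return ("VIOLATION", "stub: matched the cheating policy")


posts = ["Great match last night!", "Selling an aimbot, DM me"]
results = [cascade(post, keyword_score, stub_reasoner) for post in posts]
```

Only the second post reaches the reasoner here, which is the point of the design: the expensive stage runs on the small share of content the cheap stage flags.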

Collaboration with the safety community

The release of gpt-oss-safeguard is part of OpenAI's commitment to open collaboration. The model was developed and tested together with ROOST, SafetyKit, Tomoro, and Discord to meet the needs of developers in real-world scenarios.

Vinay Rao, CTO of ROOST, says this is the first open source reasoning model with a “bring your own policy” approach, which lets organizations define their own risks while keeping moderation decisions transparent.

ROOST also launched the ROOST Model Community (RMC), a hub where researchers and developers can share knowledge, test safety models, and improve open source safety tools together.

Getting Started with gpt-oss-safeguard

Developers can get started with gpt-oss-safeguard today by downloading the model from Hugging Face. Because the model reasons over whatever policy it is given, users can:

- Experiment with writing and revising their own safety policies.

- Analyze the model's reasoning to improve those policies.

- Integrate open reasoning models into existing content moderation systems.

This is a meaningful step towards making AI safety “open and customizable,” allowing developers to define and control the safety standards of their own systems.

Summary

The launch of gpt-oss-safeguard is a significant step for open safety tooling in AI. By combining reasoning with a flexible, developer-defined policy framework, OpenAI lets developers design platform-specific safeguards, whether for content moderation, trust & safety, or community enforcement. Organizations can use the model to protect users with transparency, accountability, and adaptability as online risks change.

